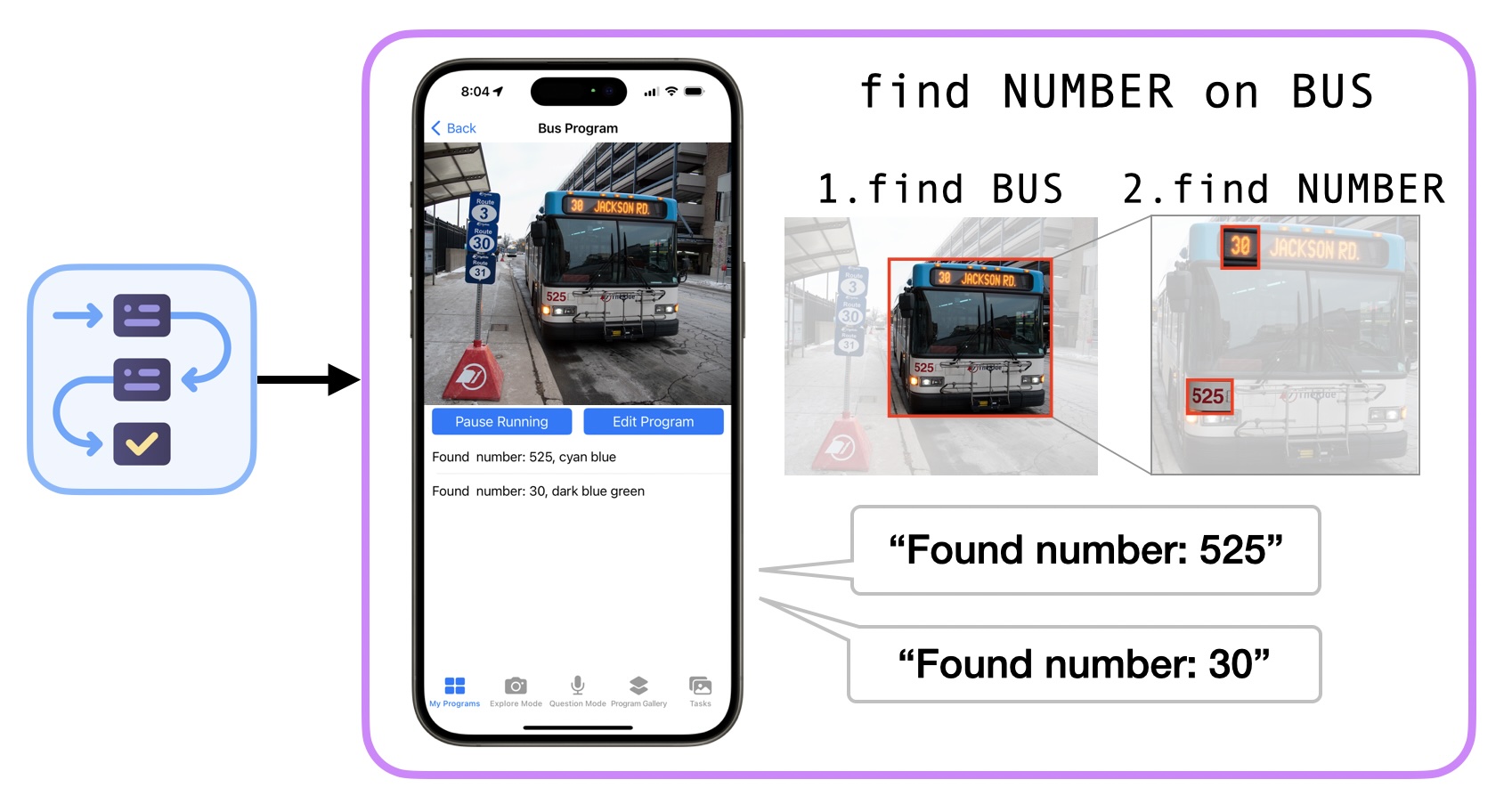

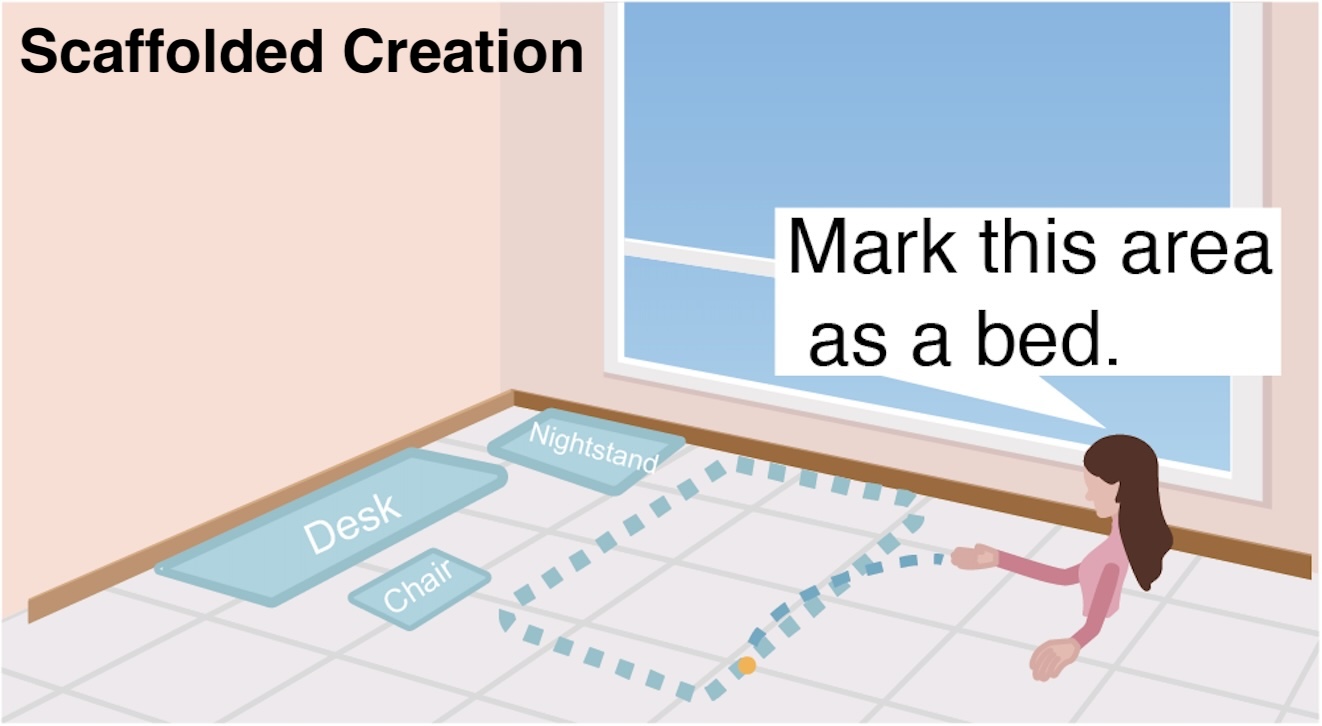

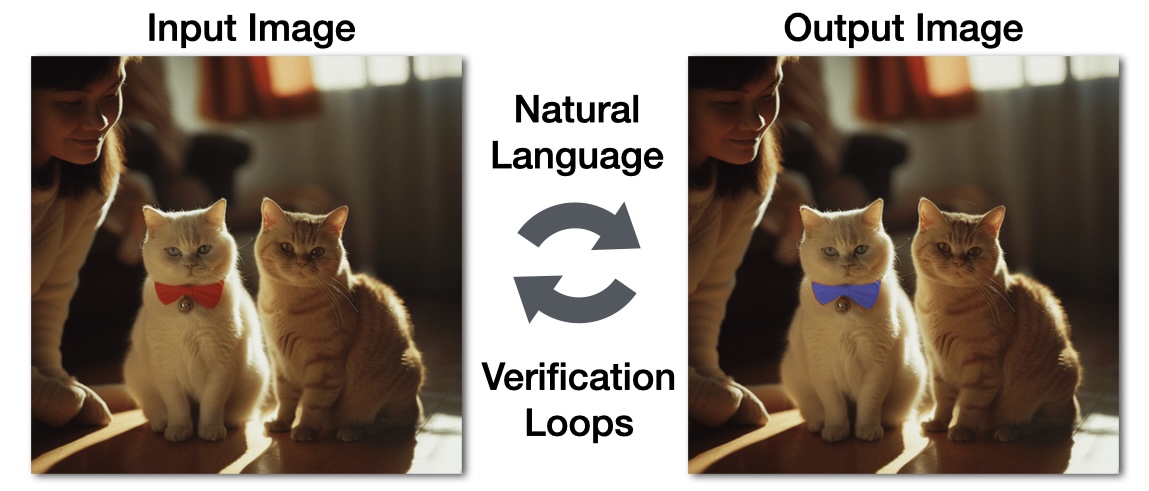

We design, develop, deploy, and study human-AI systems to support Personal Accessibility. We empower people to leverage their domain expertise to create and customize technology for themselves. In doing so, we highlight the unique needs of people (e.g., people with disabilities) and the importance of designing for the long tail of needs, so that technologies can best support them.

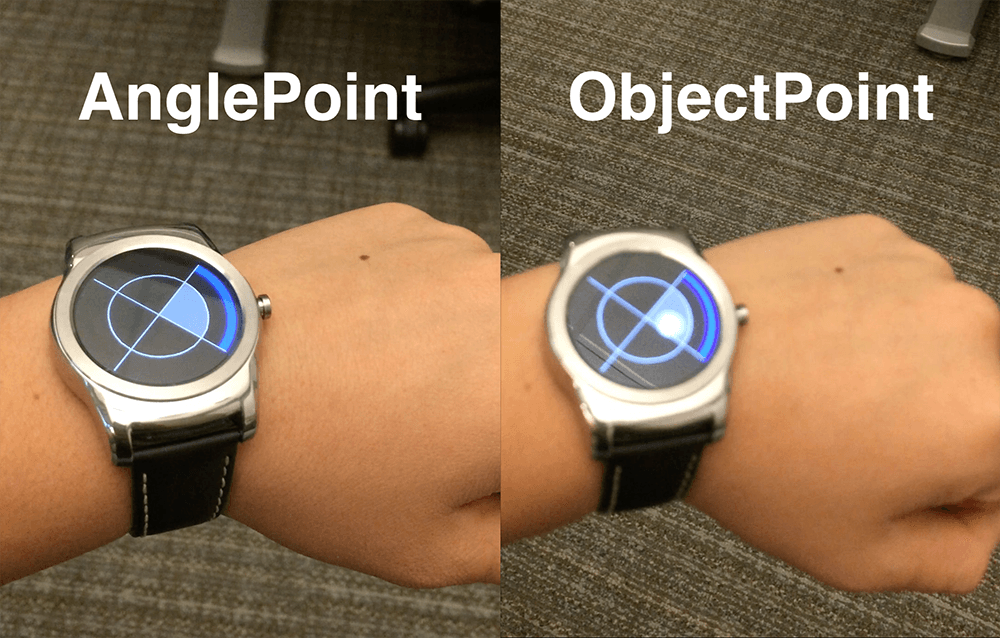

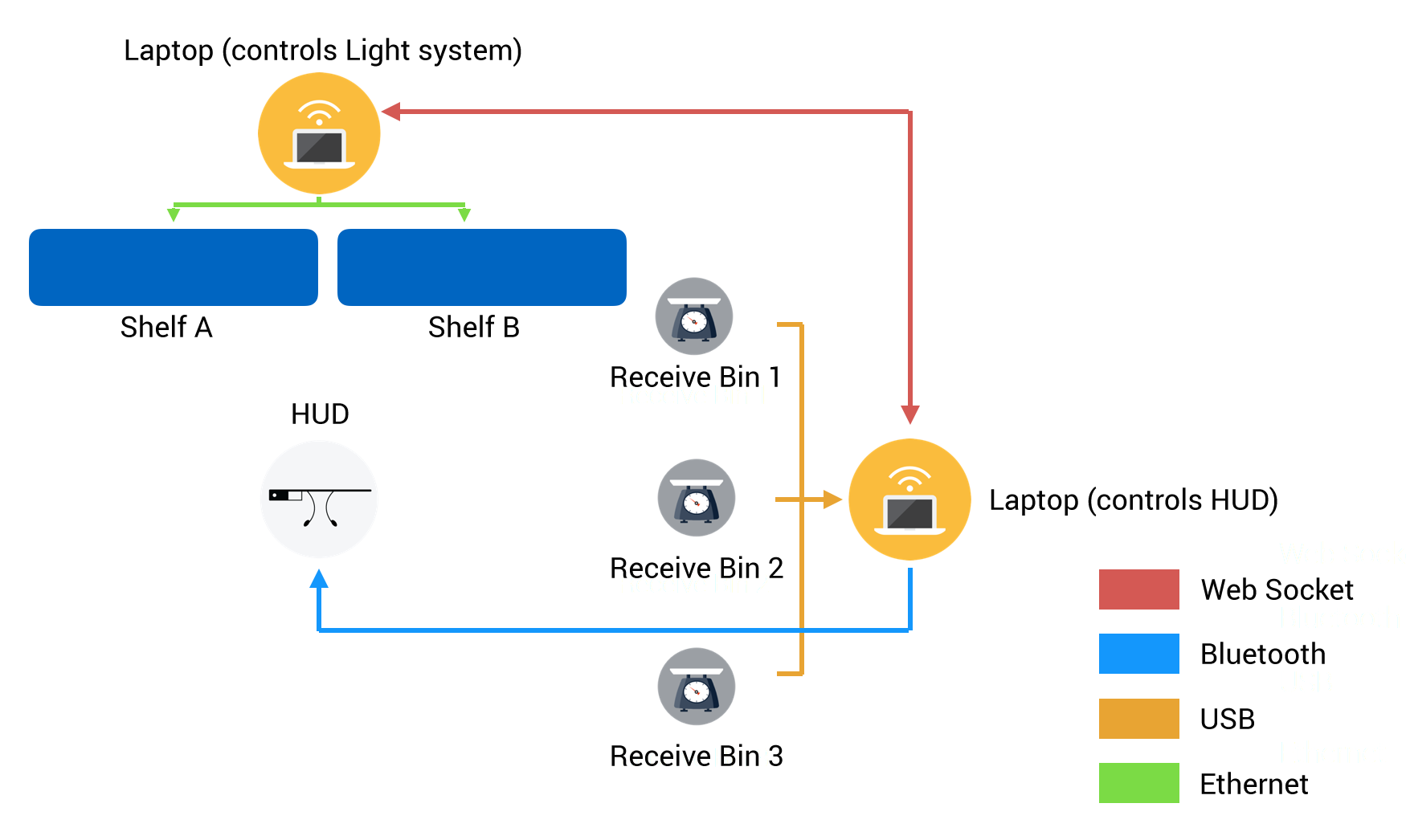

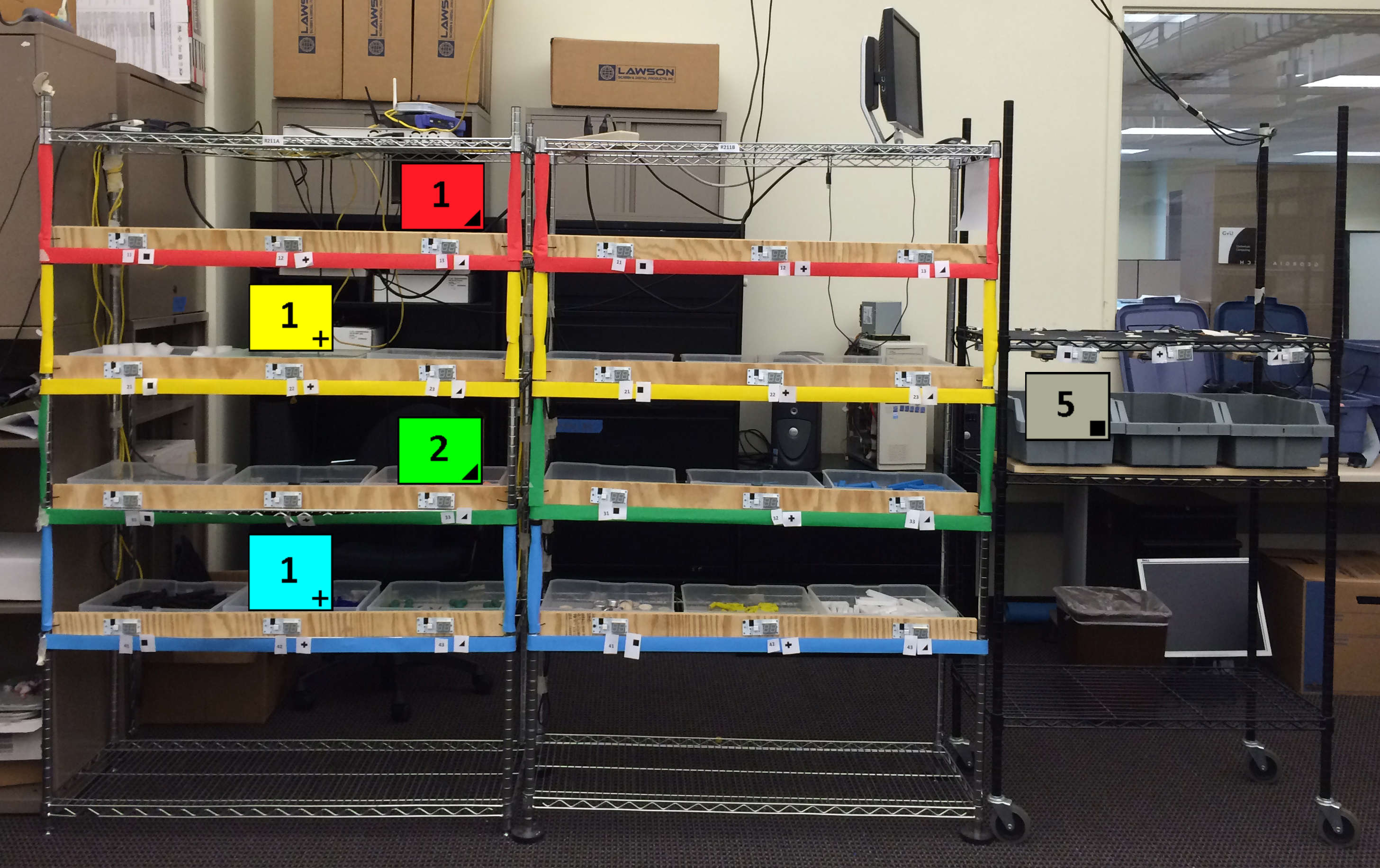

Anhong Guo is an Assistant Professor in Computer Science & Engineering at the University of Michigan, also affiliated with the School of Information. His research lies at the intersection of HCI and AI, leveraging the synergy between human and machine intelligence to create interactive systems for accessibility, collaboration, and beyond. His research has received best paper, honorable mention, and artifact awards at CHI, UIST, ASSETS, and MobileHCI, as well as the 10-year impact award at ISWC for work on wearable technologies for warehouse order picking. He is a recipient of the NSF CAREER award, a Forbes 30 Under 30 Scientist, a Google Research Scholar, an inaugural Snap Inc. Research Fellow, and a Swartz Innovation Fellow for Entrepreneurship. Anhong holds a Ph.D. in Human-Computer Interaction from Carnegie Mellon University, a Master's in HCI from Georgia Tech, and a Bachelor's in Electronic Information Engineering from BUPT. He has also worked in the Ability and Intelligent User Experiences groups at Microsoft Research, the HCI group at Snap Research, and the Accessibility Engineering team at Google.

News

Publications